How do flaky tests fail?

definition

Flaky test, flake, noun

A test that passes and fails with the exact same code.

How is that even possible if we haven't changed a single line of code?

You will see flaky tests described as non-deterministic. But what does that even mean?

Flaky test failures just seem to be random. But if you really pay attention, flakiness depends on:

- interactions between components

- execution conditions

Interactions between components

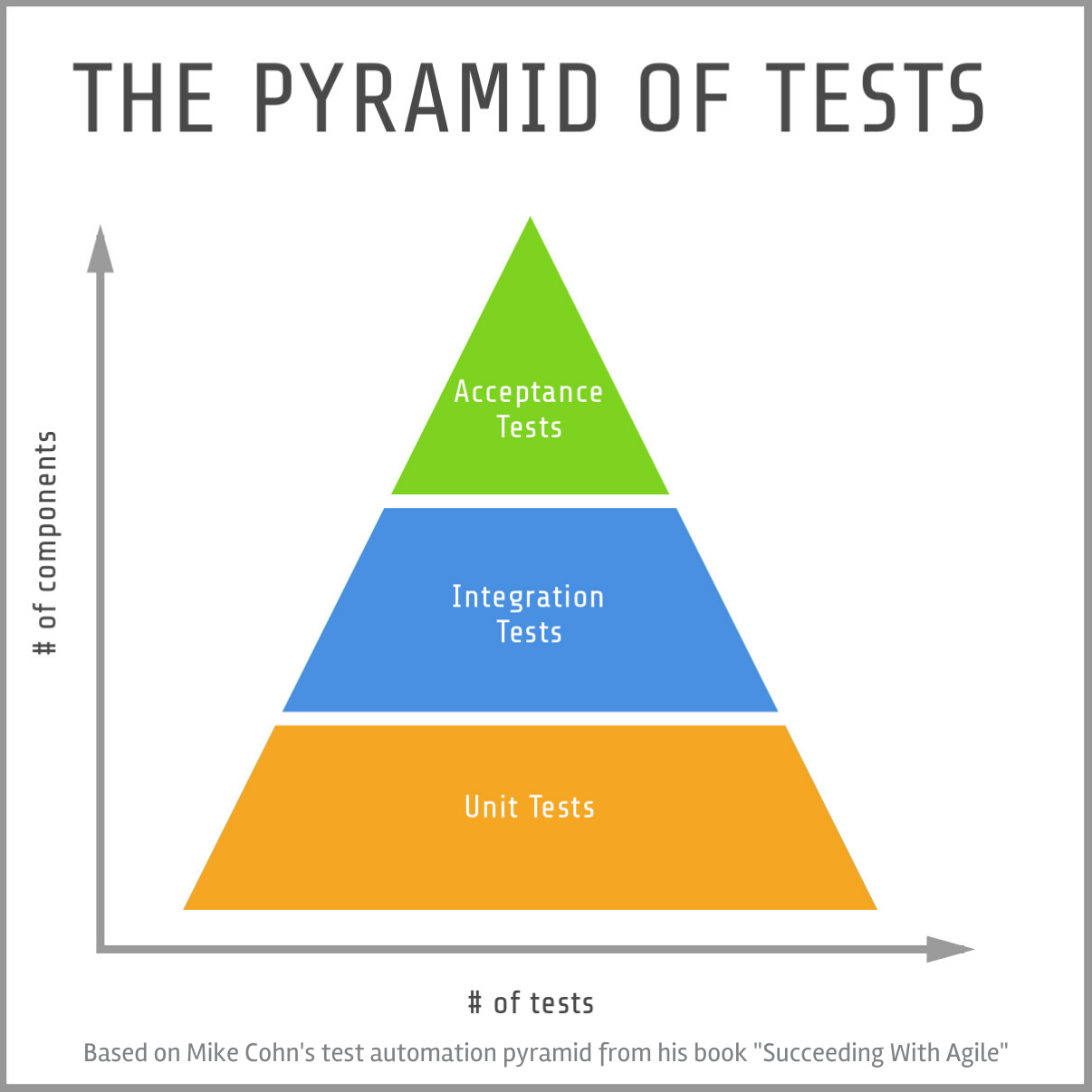

Chances are you are familiar with the Test Pyramid:

Components interacting

Here are the components that are usually involved for each test type:

| | Unit test | Integration test | Acceptance test |
|---|---|---|---|
| Test code | ✔ | ✔ | ✔ |
| Code under test | ✔ | ✔ | ✔ |
| Dependencies code | | ✔ | ✔ |
| WebDriver | | | ✔ |
| Browser | | | ✔ |
| Frontend code | | | ✔ |
| Backend code | | | ✔ |

The flakiness pyramid

The further up you are in the test pyramid, the more components interact, and the more likely the test is to be flaky.

In a Unit Test, there are no interactions apart from the test code and the module under test. You cannot make it simpler.

In an Integration Test, your module is interacting with its dependencies. The more dependencies, the more complex the test will be.

In an Acceptance Test (or System Test, or End-to-End Test), the whole application is involved, from the frontend to the database. Your test code might trigger a click on a frontend button using a browser driver (like Selenium WebDriver). That click triggers an asynchronous HTTP request to your test server, which runs your backend code, which talks to the database.

As you can see, an Acceptance Test is much more complex than an Integration Test, which is more complex than a Unit Test.

Asynchronous interactions

When you move up the test pyramid, chances are that some of these components will be interacting asynchronously.

For example:

- an Ajax request from the client

- asynchronous indexing in Elasticsearch

- a background job enqueued

- a timeout

If you don't wait properly for these things to finish, flakiness will bite you.

Asynchronous flakiness

The more asynchronous interactions there are, the more likely the test will be flaky.
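As a minimal sketch of what "waiting properly" can look like, the snippet below polls for an observable outcome instead of sleeping for a fixed duration. The `wait_until` helper and the `BackgroundJob` class are hypothetical names made up for this illustration; most test frameworks and browser drivers ship their own waiting helpers, which are usually the better choice.

```python
import threading
import time


def wait_until(condition, timeout=2.0, interval=0.01):
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    # One last check so a result that arrives right at the deadline still counts.
    return condition()


class BackgroundJob:
    """Hypothetical stand-in for an Ajax call, an indexing task or a queued job."""

    def __init__(self, delay):
        self.done = threading.Event()
        self._timer = threading.Timer(delay, self.done.set)

    def start(self):
        self._timer.start()


job = BackgroundJob(delay=0.05)
job.start()

# Flaky: a fixed time.sleep(0.05) followed by an assertion only passes
# when the job happens to finish in time.
# More robust: wait for the outcome itself, with a generous upper bound.
finished = wait_until(job.done.is_set, timeout=2.0)
```

The timeout is only an upper bound: on a fast machine the wait returns as soon as the job completes, and on a slow CI instance it simply waits longer instead of failing.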

Execution conditions

There are external conditions that can influence the way a test executes.

A series of events

We can see a test execution as a series of events. When these events happen in a specific order, the test passes. But external conditions can alter the order in which these events take place and result in a test failure.

Environment

Your laptop, your coworker's desktop computer and a CI cloud instance can have differences, such as:

- CPU

- Memory

- Disk I/O

- Networking I/O

- Operating System

- Dependency versions (runtime, databases, etc.)

Each one of them can change the order of events, because one environment will execute some tasks faster than another.

For example, a test might run faster on your local environment than on the CI, because your machine is much more powerful.

Resources

The test and all the components involved use and compete for available resources, such as:

- CPU

- Memory

- Networking I/O

- Disk I/O

The availability of these resources will never be exactly the same between two different test executions.

Your computer might have more programs running or a browser tab that uses your CPU.

The CI service might have "noisy neighbors" if some resources are shared with different clients or even with your coworkers.

Tests that ran previously might also have left the system in a different resource usage state.

Test execution order

note

Test execution order changes over time.

You change the execution order when you:

- execute your tests in a random order (and you should)

- add or remove a test

- split the test suite into different parallel jobs to speed up the build time

Order flakiness

Tests that are dependent on execution order are more likely to be flaky.

A test can depend on execution order if:

- a previous test leaves the system in a bad state (database records, global or class variables, cache entries, cookies, files)

- it expects the system to be in a specific state, but does not set the state by itself

Examples of smells:

- using `before(:all)` to create records in an RSpec test to speed up test execution

- using class variables in the code under test

Best practice

As a rule of thumb:

- prepare the state for each test independently

- revert to a clean state after each test execution

- avoid using shared state between tests
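The rules above can be sketched with Python's standard `unittest` module. The module-level `USERS` list is a hypothetical stand-in for a database table or any other shared state, and `run_in_order` is a helper written only for this example to show that the tests pass in either order.

```python
import unittest

# Hypothetical shared state: a module-level "database" that every test can touch.
USERS = []


class UserTests(unittest.TestCase):
    def setUp(self):
        # Prepare the state this test needs, independently of any other test.
        USERS.clear()
        USERS.append("alice")

    def tearDown(self):
        # Revert to a clean state so nothing leaks into the next test.
        USERS.clear()

    def test_add_user(self):
        USERS.append("bob")
        self.assertEqual(USERS, ["alice", "bob"])

    def test_initial_user_count(self):
        # Would fail after test_add_user if state leaked between tests.
        self.assertEqual(len(USERS), 1)


def run_in_order(names):
    """Run the named tests in the given order and report whether all passed."""
    suite = unittest.TestSuite(UserTests(name) for name in names)
    result = unittest.TestResult()
    suite.run(result)
    return result.wasSuccessful()


forward = run_in_order(["test_add_user", "test_initial_user_count"])
backward = run_in_order(["test_initial_user_count", "test_add_user"])
```

Without the `setUp` and `tearDown` hooks, `test_initial_user_count` would pass or fail depending on whether `test_add_user` ran before it.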

Time and date

Each and every test execution happens at a different date/time. The code under test and the test code itself can sometimes depend on them.

caution

Tests that depend on date and/or time are more likely to be flaky.

Examples of smells:

- asserting date/time without freezing it

- mixing methods that respect and don't respect timezones

Best practice

Always freeze time when testing time-dependent code.
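One way to get the effect of frozen time without a time-freezing library is to let the code under test accept the current time as a parameter. The sketch below takes that approach; `is_expired` and its `now` parameter are hypothetical names chosen for this example, and dedicated time-freezing helpers in your test framework are an equally valid option.

```python
from datetime import datetime, timedelta, timezone


def is_expired(expires_at, now=None):
    """Return True once `expires_at` has been reached.

    `now` is a hypothetical injection point: production code omits it,
    tests pass a fixed instant so the result never depends on the wall clock.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    return now >= expires_at


# A fixed, timezone-aware instant, so naive and aware datetimes are never mixed.
FROZEN_NOW = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)

result_before_deadline = is_expired(FROZEN_NOW + timedelta(seconds=1), now=FROZEN_NOW)
result_at_deadline = is_expired(FROZEN_NOW, now=FROZEN_NOW)
```

Because both calls use the same fixed instant, the boundary case (expiry exactly at "now") can be asserted reliably, which is hard to do against a moving clock.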

Others

Other things can lead to flakiness.

Unordered collections

caution

Tests that assert collections that have an unspecified order are more likely to be flaky.

This typically happens when you test the result of a query that has no explicit ordering clause.
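A small sketch of the two usual fixes, using an in-memory SQLite database from the Python standard library. The `users` table and its contents are made up for this illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO users (name) VALUES (?)",
    [("carol",), ("alice",), ("bob",)],
)

# Smell: without ORDER BY the database is free to return rows in any order,
# so asserting on the exact list is fragile.
unordered = [row[0] for row in conn.execute("SELECT name FROM users")]

# Option 1: make the order part of the query.
ordered = [row[0] for row in conn.execute("SELECT name FROM users ORDER BY name")]

# Option 2: keep the query as it is and compare without regard to order.
same_members = sorted(unordered) == ["alice", "bob", "carol"]

conn.close()
```

Option 1 fits when the order matters to the feature under test. Option 2 fits when only the membership of the collection matters.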

Random data

caution

Tests that use random data are more likely to be flaky.

You may generate random data in order to prepare your database state. A typical example is the use of Faker in your factories. When you do that, the random data might sometimes satisfy your test conditions, but sometimes trigger unexpected behaviors.
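The sketch below illustrates the problem and two possible mitigations. It uses Python's standard `random` module as a stand-in for a fake-data library; `random_user`, `can_vote` and the name pool are hypothetical and exist only for this example.

```python
import random

FIRST_NAMES = ["Ann", "Bob", "Cy"]  # hypothetical, deliberately tiny pool


def random_user(rng):
    """Hypothetical factory: builds a user from a random number generator."""
    return {"name": rng.choice(FIRST_NAMES), "age": rng.randint(0, 99)}


def can_vote(user):
    return user["age"] >= 18


# Smell: random_user(random.Random()) yields a minor in some runs and an
# adult in others, so asserting can_vote(...) on it passes only sometimes.

# Option 1: pin the values the assertion depends on, keep the rest random.
adult = {**random_user(random.Random()), "age": 30}

# Option 2: seed the generator and log the seed, so a failure can be replayed.
seed = 1234
first_run = random_user(random.Random(seed))
second_run = random_user(random.Random(seed))
```

Pinning keeps the test's intent explicit, while seeding keeps the variety of random data and makes any failure reproducible.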